In an effort to combat deception, Google is testing a digital watermark to detect photos created by artificial intelligence (AI).

SynthID, developed by Google’s AI arm DeepMind, will recognize machine-generated images.

It works by embedding changes to individual pixels in images, so the watermark is invisible to the human eye but can be detected by computers.

DeepMind, however, stated that it is not “foolproof against extreme image manipulation.”
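The article does not describe how SynthID works internally, so the sketch below is not Google’s method. It is a minimal Python illustration of the simplest kind of pixel-level watermark, for contrast. It assumes a grayscale image stored as a flat list of pixel values from 0 to 255 and hides a hypothetical secret bit pattern (`key`) in each pixel’s least significant bit. The helper names `embed` and `detect` are likewise hypothetical. Each pixel changes by at most 1, which no person would notice, yet a one-step brightness change wipes the mark out. That fragility is exactly what DeepMind says SynthID is designed to resist.

```python
# Toy least-significant-bit (LSB) watermark. Hypothetical illustration only;
# this is NOT SynthID, whose technique is not described in the article.

def embed(pixels, bits):
    """Return a copy of `pixels` (ints 0-255) with each pixel's lowest bit
    replaced by the matching watermark bit."""
    return [(p & ~1) | b for p, b in zip(pixels, bits)]

def detect(pixels, bits):
    """Return the fraction of pixels whose lowest bit matches the expected pattern."""
    matches = sum((p & 1) == b for p, b in zip(pixels, bits))
    return matches / len(bits)

image = [200, 13, 77, 145, 90, 255, 0, 34]  # made-up 8-pixel "image"
key = [1, 0, 1, 1, 0, 0, 1, 0]              # hypothetical secret bit pattern

marked = embed(image, key)
print(max(abs(a - b) for a, b in zip(image, marked)))  # 1: no pixel moves more than one step
print(detect(marked, key))                             # 1.0: watermark fully present

# A barely visible edit: brighten every pixel by one step.
brighter = [min(p + 1, 255) for p in marked]
print(detect(brighter, key))                           # 0.0: the toy watermark is gone
```

A robust scheme has to spread its signal so that it survives changes to colour, contrast and size, which is the property Mr Kohli claims for SynthID later in this article.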

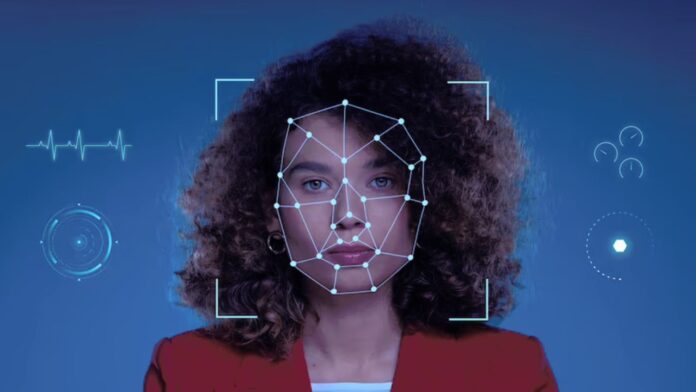

As technology advances, it becomes increasingly difficult to distinguish between real and artificially made visuals, as demonstrated by BBC Bitesize’s AI or Real quiz.

AI image generators have become widespread, with the popular tool Midjourney boasting more than 14.5 million users.

They let people create images in seconds from simple text prompts, which has raised concerns about copyright and ownership around the world.

Google has its own image generator, called Imagen, and its watermarking system will only apply to images made with this tool.

Invisible

Typically, a logo or piece of text is applied to an image as a watermark to indicate ownership and, in part, to make it more difficult for the image to be duplicated and used without permission.

For example, images on the BBC News website often carry a copyright watermark in the bottom-left corner.


However, because they can easily be edited or cropped out, these kinds of watermarks are not suited to identifying AI-generated images.

Tech companies use a process known as hashing to create digital “fingerprints” of known videos of abuse, which lets them spot and quickly remove those videos if they start circulating online. However, these fingerprints can also be corrupted if a video is cropped or edited.
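The failure mode described above can be shown with a minimal Python sketch. It assumes the simplest possible fingerprint, an exact SHA-256 hash of the raw pixel bytes from the standard `hashlib` module. Real systems typically use perceptual hashes that tolerate some edits, and their details are not covered here. The names `fingerprint`, `blocklist` and `is_known` are hypothetical, and the "image" is a made-up list of pixel values.

```python
import hashlib

def fingerprint(pixels):
    """Hypothetical exact fingerprint: SHA-256 digest of the raw pixel bytes (0-255)."""
    return hashlib.sha256(bytes(pixels)).hexdigest()

known = [10, 20, 30, 40, 50, 60, 70, 80]  # made-up pixels of a known image
blocklist = {fingerprint(known)}          # fingerprints of known material

def is_known(pixels):
    """True if this exact content has been fingerprinted before."""
    return fingerprint(pixels) in blocklist

print(is_known(known))                   # True: an identical copy is caught
print(is_known(known[1:]))               # False: cropping one pixel changes the hash
print(is_known([p + 1 for p in known]))  # False: a slight brightness edit also evades it
```

Because any change to the bytes produces an unrelated digest, an exact hash only catches identical copies. Perceptual hashes narrow this gap, but, as the article notes, cropping or altering a file can still defeat them.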

Google’s technique creates an effectively invisible watermark, letting people use its software to tell instantly whether an image is real or machine-made.

DeepMind’s chief of research, Pushmeet Kohli, told the BBC that his company’s system transforms images so delicately that “to you and me, to a human, it does not change.”

He claims that, unlike hashing, the firm’s software can still detect the watermark even if the image is later cropped or edited.

“You can change the color, the contrast, even the size… [and DeepMind] will still be able to see that it is AI-generated,” he explained.

However, he stressed that this is an “experimental launch” of the system, and that the firm wants people to use it so it can learn more about how robust it is.


Standardisation

In July, Google was one of seven leading artificial intelligence companies to sign a voluntary agreement in the United States to ensure the safe development and use of AI, which included using watermarks so that people can spot computer-generated images.

Mr Kohli said this move reflected those commitments, but Claire Leibowicz of the campaign group Partnership on AI said more collaboration between firms is needed.

“I believe standardization would be beneficial to the field,” she stated.

“Various methods are being pursued; we need to monitor their impact; how can we get better reporting on which are working and for what purpose?”

“A lot of institutions are experimenting with different methods, which adds degrees of complexity, because our information ecosystem relies on different methods for interpreting and disclaiming AI-generated content,” she explained.

Like Google, Microsoft and Amazon are among the major technology companies that have pledged to watermark some AI-generated content.

Beyond images, Meta has published a research paper on its unreleased video generator, Make-A-Video, which says watermarks will be added to generated videos to meet similar transparency requirements for AI-made work.

China banned AI-generated images without watermarks at the beginning of this year, and companies such as Alibaba apply them to content created with its cloud division’s text-to-image tool, Tongyi Wanxiang.
